30/10/2025

NEW YORK, Oct 30: AI-generated videos are becoming increasingly realistic, making it harder for viewers to distinguish real footage from computer-generated content, experts say. OpenAI’s Sora app, powered by the company’s new Sora 2 video model, has intensified concerns about deepfakes and misinformation.

Sora, a TikTok-like platform, has gained viral popularity in recent months, attracting AI enthusiasts eager for invite codes. Unlike traditional social media platforms, all content on Sora is fully AI-generated. Experts describe it as an “AI deepfake fever dream,” seemingly harmless at first but carrying potential risks.

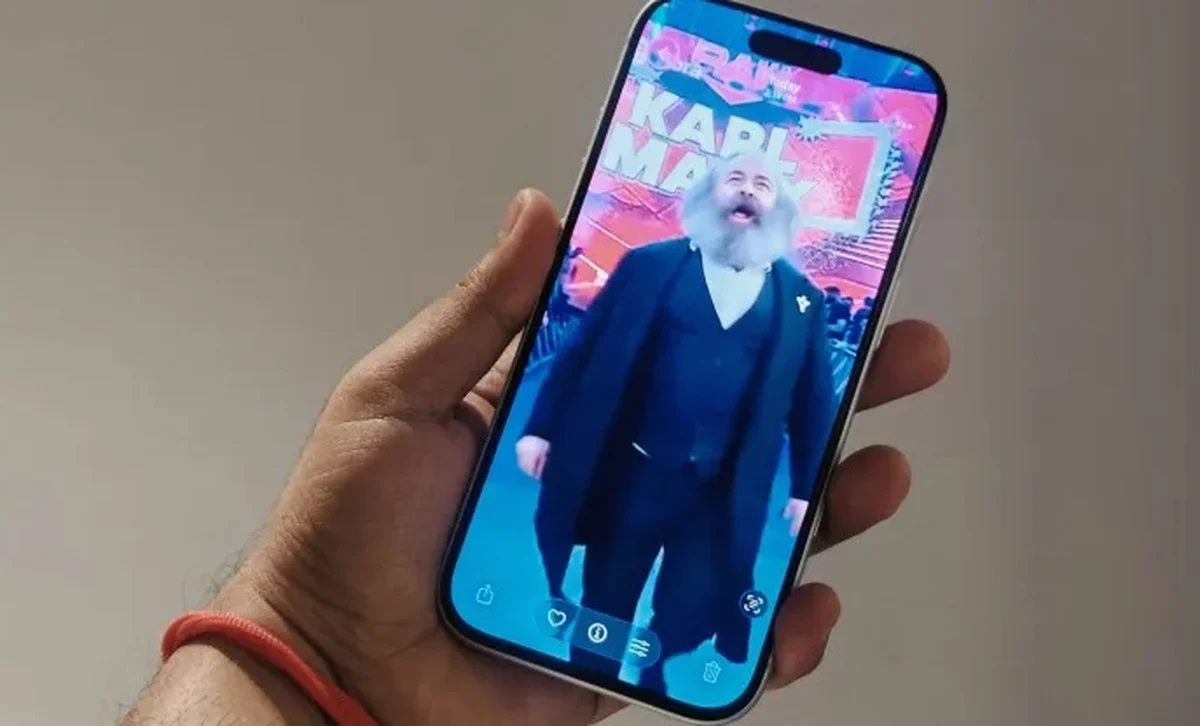

Technically, Sora videos surpass competitors such as Midjourney V1 and Google’s Veo 3 in resolution, audio synchronization, and creative output. Its most popular feature, “Cameo,” allows users to insert other people’s likenesses into AI-generated scenes, producing startlingly realistic videos.

The realism has raised concerns about misinformation, public figure impersonation, and the spread of dangerous content. Organizations, including SAG-AFTRA, have urged OpenAI to implement stricter safeguards.

Identifying AI-generated content

Experts advise several methods for spotting Sora videos, though none is foolproof. One key indicator is the Sora watermark: downloaded videos display a white cloud logo that moves along the edges of the frame, similar to TikTok watermarks. The watermark signals AI creation, but it can be cropped out or removed with third-party apps, which makes it unreliable on its own.

OpenAI CEO Sam Altman emphasized the need for society to adapt to a world where anyone can produce realistic fake videos, highlighting the importance of additional verification methods.

Metadata verification

Metadata, automatically attached to digital content, can reveal whether a video is AI-generated. Sora videos include C2PA metadata, a content provenance standard supported by the Content Authenticity Initiative (CAI), which can be checked with the initiative’s online verification tool.

To check a video’s metadata:

- Visit https://verify.contentauthenticity.org/

- Upload the file

- Click “Open”

- Review the content summary for AI origin indicators

For a verified Sora video, the tool shows that the content credential was “issued by OpenAI” and confirms that the video was AI-generated. Experts note, however, that this metadata can be altered or lost when a video is edited or its watermark is removed, which reduces detection accuracy.
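For readers who want to automate the same check, the sketch below shows one way to read a C2PA manifest report that has already been exported as JSON. It is a minimal illustration, not OpenAI’s or the CAI’s implementation. The field names (`active_manifest`, `manifests`, `signature_info`, `assertions`) are assumptions modeled on the reports that open-source C2PA tools produce, and `SAMPLE_REPORT` is invented data for demonstration. The `trainedAlgorithmicMedia` value is the IPTC digital source type used to mark media created by a generative model.

```python
import json

# IPTC digital source type term that marks media created by a generative model.
AI_SOURCE_SUFFIX = "trainedAlgorithmicMedia"


def summarize_manifest(report: dict) -> dict:
    """Summarize a parsed C2PA manifest report.

    Assumes a report layout with an "active_manifest" key that names one
    entry in "manifests"; real reports may be structured differently.
    """
    manifests = report.get("manifests", {})
    active = manifests.get(report.get("active_manifest"), {})

    # Who signed the credential (for example, the generating service).
    issuer = active.get("signature_info", {}).get("issuer")

    # Look through the recorded actions for an AI digital source type.
    ai_generated = False
    for assertion in active.get("assertions", []):
        if not assertion.get("label", "").startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            source_type = str(action.get("digitalSourceType", ""))
            if source_type.endswith(AI_SOURCE_SUFFIX):
                ai_generated = True

    return {
        "has_manifest": bool(active),
        "issuer": issuer,
        "ai_generated": ai_generated,
    }


# Hypothetical report, invented for illustration only.
SAMPLE_REPORT = {
    "active_manifest": "urn:uuid:example",
    "manifests": {
        "urn:uuid:example": {
            "signature_info": {"issuer": "OpenAI"},
            "assertions": [
                {
                    "label": "c2pa.actions",
                    "data": {
                        "actions": [
                            {
                                "action": "c2pa.created",
                                "digitalSourceType": (
                                    "http://cv.iptc.org/newscodes/"
                                    "digitalsourcetype/trainedAlgorithmicMedia"
                                ),
                            }
                        ]
                    },
                }
            ],
        }
    },
}

if __name__ == "__main__":
    print(json.dumps(summarize_manifest(SAMPLE_REPORT), indent=2))
```

A result with `has_manifest` set to false means only that no credential was found. As noted above, editing a video can strip the metadata, so a missing credential is not evidence that the footage is real.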

Platforms including Meta, TikTok, and YouTube now use AI labeling systems to flag such content, though these do not catch everything. The most reliable signal remains disclosure by the creator: captions or credits noting AI generation give viewers transparency.

Experts warn that no single method guarantees the identification of AI videos. Viewers should critically assess content and watch for anomalies such as distorted text, disappearing objects, or impossible physics. Even experienced professionals can be misled, making scrutiny essential.

As AI platforms like Sora blur the line between reality and fiction, experts stress the collective responsibility of content creators and viewers to ensure transparency and limit misinformation.