27/01/2026

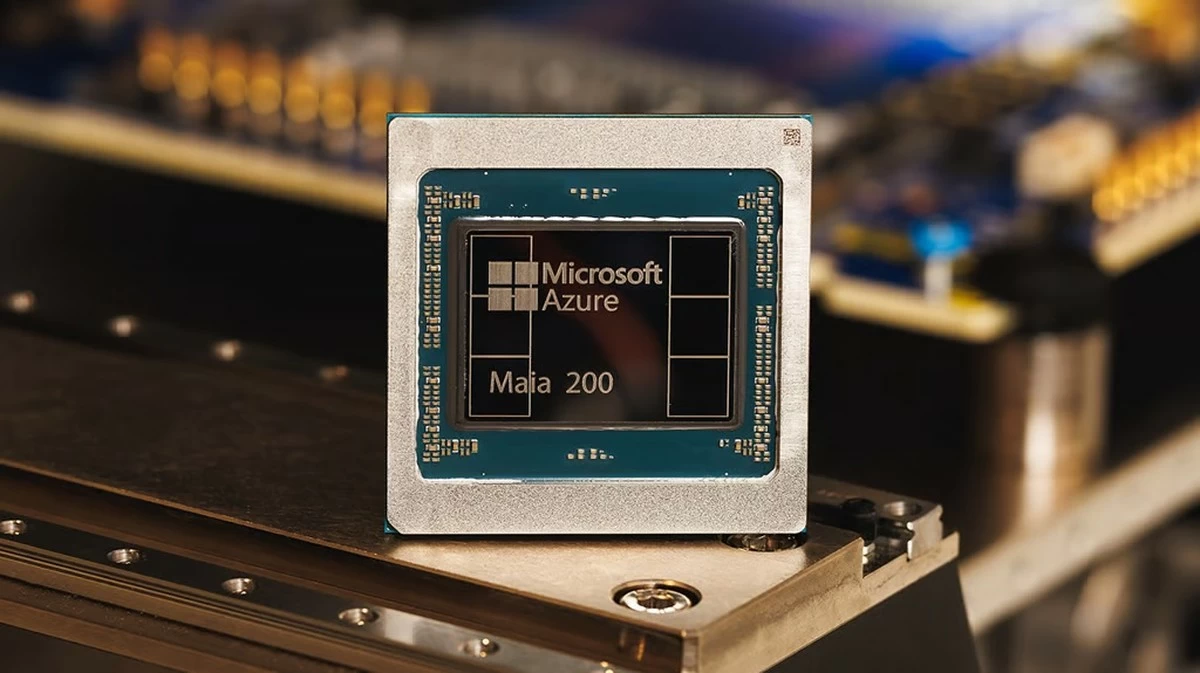

NEW YORK, Jan 27: Microsoft on Monday unveiled its next-generation AI chip, Maia 200, designed to power large-scale AI workloads in the company’s data centers and eventually be offered to external customers, marking a strategic move to compete with Amazon and Google in cloud-based artificial intelligence.

The Maia 200 follows Microsoft’s Maia 100 chip, released in 2023, and is built using Taiwan Semiconductor Manufacturing Co.’s (TSMC) 3-nanometer process. The chip is designed to deliver high-performance AI inference while “efficiently maximizing performance per dollar,” Microsoft said. Each server rack will hold trays with four Maia 200 chips, which can be installed and running AI models within days of delivery.

By developing its own AI processors, Microsoft reduces reliance on third-party chips from Nvidia and AMD. While Google and Amazon have been using custom chips for years, Microsoft’s entry into in-house AI silicon comes as cloud providers increasingly seek to optimize AI workloads and lower operating costs.

The Maia 200, equipped with more than 100 billion transistors, delivers over 10 petaflops in 4-bit precision and roughly 5 petaflops in 8-bit precision. Microsoft said the chip offers enhanced high-bandwidth memory, boosting performance for large AI models. The company claims the Maia 200 outperforms Google’s seventh-generation TPU and Amazon’s latest Trainium chip in key metrics, including FP4 and FP8 performance.

“From a practical standpoint, one Maia 200 node can effortlessly run today’s largest AI models, with plenty of headroom for even bigger models in the future,” Microsoft said. The chip is already powering AI models from Microsoft’s Superintelligence team and supporting operations of its Copilot chatbot.

The launch comes amid growing competition for Nvidia, whose GPUs remain highly sought after for multipurpose AI workloads. While the custom chips from Microsoft, Google, and Amazon support the companies’ own services, analysts note they are unlikely to fully replace Nvidia for smaller third-party customers.

The Maia 200 is part of a broader trend of tech companies designing their own processors to reduce dependency on Nvidia. Google’s TPUs and Amazon’s Trainium chips serve similar purposes, offering AI compute power without relying on external GPU suppliers.

Microsoft has also invited developers, researchers, and AI labs to test the Maia 200 software development kit in their workloads, signaling an effort to expand adoption beyond its internal data centers.